AI has matured enough to become part of your digital core. Now that 88% of organizations have adopted AI in at least one business function, the bigger risk is ignoring AI’s upside.

So today's CFOs face a new challenge. How do you balance the stability an ERP provides with the innovation that new AI tools promise? And which implementations deserve your trust when every new tool promises to revolutionize the industry?

To make the right call, look under the hood. Understanding the architecture reveals which AI platforms deliver results without compromising rigor.

A look at what’s changed

‘AI,’ as we currently know it, usually refers to large language models, or LLMs. Language models have existed since the 1990s. Those early models read one word (or number) at a time, from left to right, like reading a book with your finger tracking each word.

In 2017, something fundamental changed. Google researchers introduced the ‘transformer’ architecture, which now underpins the LLMs that have become household names, like ChatGPT. The transformer architecture enables a language model to take any ordered sequence of information (words, numbers, code) and learn the patterns in it. Old language models were dial-up. Transformers are fiber.

Today’s LLMs blend your institutional knowledge with the world’s public data, making connections no earlier system could, without a single line of custom code. Powerful, yes. But for business users, that power cuts both ways.

Know the risks

Accuracy

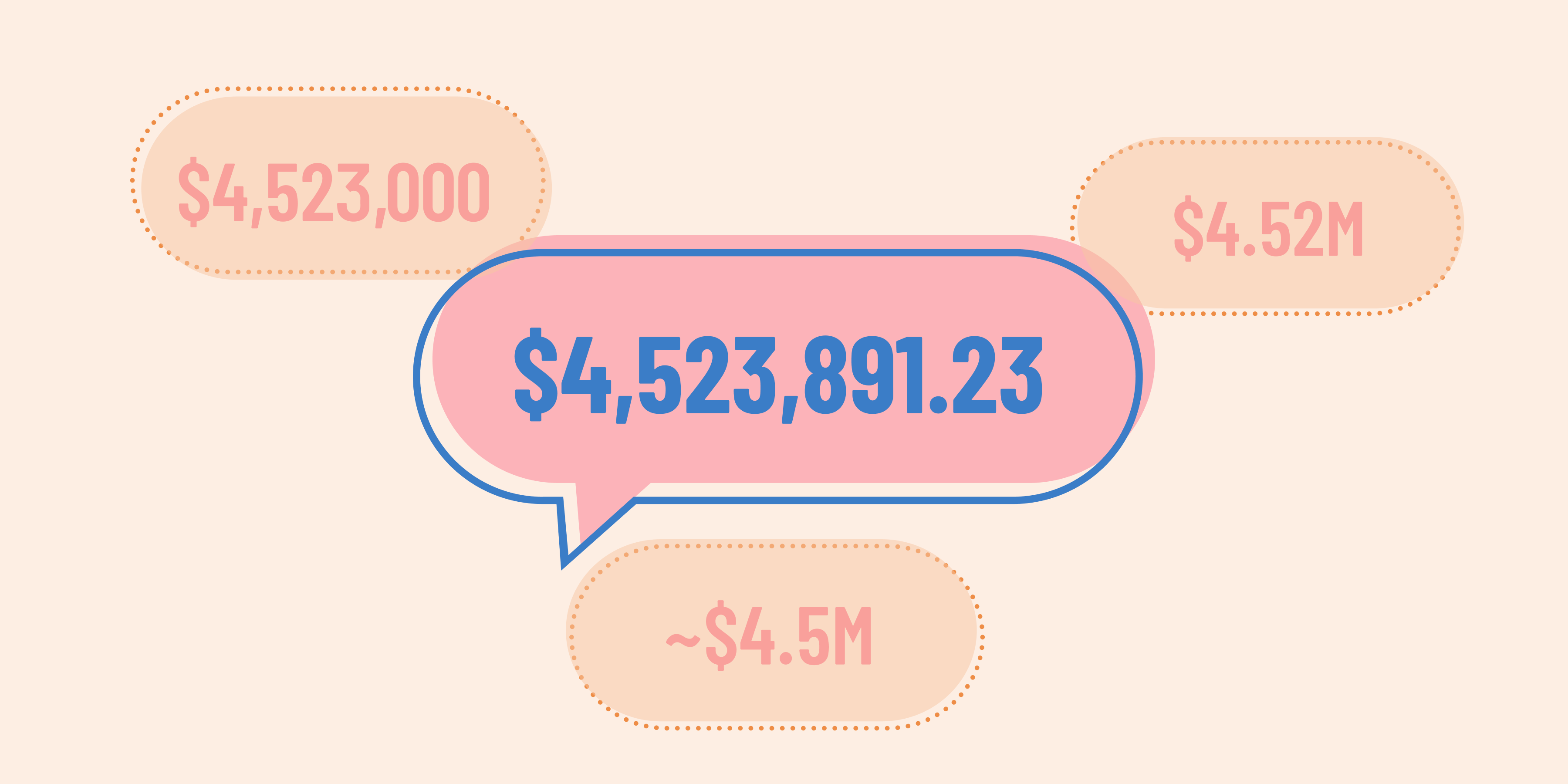

One primary concern for CFOs is that LLMs don't calculate; they generate probable answers.

Consider a simple query: What was revenue in Q3 for EMEA? Your ERP will run traditional deterministic code that returns the same result every time: $4,523,891.23. If you run the request three times in ChatGPT, the first query might return ~$4.5M, the next returns approximately $4,523,000, and the third returns $4.52M. The number varies slightly, the format changes, and occasionally it might hallucinate entirely.
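To make the deterministic side of that contrast concrete, here is a minimal sketch in Python. The ledger rows and the `revenue` helper are illustrative assumptions, not data or code from any real ERP; the point is only that exact arithmetic over stored rows gives the same figure on every run.

```python
from decimal import Decimal

# Hypothetical ledger rows (region, quarter, amount); values are illustrative only.
LEDGER = [
    ("EMEA", "Q3", Decimal("2100450.10")),
    ("EMEA", "Q3", Decimal("1923441.13")),
    ("EMEA", "Q3", Decimal("500000.00")),
    ("APAC", "Q3", Decimal("880000.00")),
    ("EMEA", "Q2", Decimal("4100000.00")),
]

def revenue(region: str, quarter: str) -> Decimal:
    """Sum the matching rows exactly; Decimal avoids binary floating-point rounding."""
    return sum(
        (amount for r, q, amount in LEDGER if r == region and q == quarter),
        Decimal("0"),
    )

# Run the same request three times and collect the distinct answers.
distinct_answers = {revenue("EMEA", "Q3") for _ in range(3)}
formatted = f"${revenue('EMEA', 'Q3'):,.2f}"
print(distinct_answers, formatted)
```

Three runs produce one distinct answer, in one format. A generative model asked the same question has no such guarantee unless it hands the arithmetic to code like this.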

And these risks aren’t theoretical: Deloitte recently embarrassed itself when a report it prepared for the Australian government was found to contain several AI-generated errors, and the firm agreed to refund part of its fee. Beyond that direct cost, Deloitte’s own research from 2023 found that a severe reputational crisis can wipe out 30% of a company’s market value within days.

Security

CFOs are also wise to be wary of security risks. Of the organizations IBM studied that suffered an AI-related security incident, 97% lacked proper AI access controls. And the average cost of a data breach? $4.4M.

In 2023, Samsung banned the use of generative AI after discovering an engineer used ChatGPT as a debugging tool, exposing sensitive internal source code to a third party. The underlying risk is that consumer chat services may retain what you type and use it to train future models. The second you input data into the chat, you lose control over where it ends up.

Without proper constraints like access controls and governance policies, using external AI services with financial data may violate Sarbanes-Oxley (SOX) requirements, GDPR, or industry-specific regulations that require data to stay within controlled environments with proper audit trails.

Cost

Beyond the cost of reputational damage and security breaches, there’s the mounting financial burden of AI itself. Cloud spend is already painful. AI multiplies it. Every query, every refresh, every report runs up usage fees. And the current pricing is artificially low. Venture capital and private equity money has subsidized AI tool subscriptions to drive adoption. Rate increases are inevitable.

Architecture is everything

With recent advances, CFOs can now leverage AI's strengths without exposing financial data to its weaknesses.

The distinction between "AI-powered" and "AI-native" matters enormously here. Many vendors slap AI onto existing systems without rethinking how those systems work. They're wrapping a ChatGPT-style interface around decades-old data models and calling it innovation. The result is consumer-grade models making financial decisions. The same LLMs trained on Reddit threads and Wikipedia entries are now generating your revenue recognition schedules.

AI-native architecture is purpose-built. It’s designed from the beginning with the data structures, security boundaries, and governance frameworks you need to use AI safely in your financial operations.

These systems work differently in three ways:

1. Structured data retrieval

AI-native tools like Everest query structured financial data first using traditional, deterministic methods. The LLM interprets the question, but the database does the math.

Taking our earlier example, you can use plain English to ask the tool for Q3 EMEA revenue. The LLM translates your natural language into a query, and then writes code to perform the operation—either in SQL or Python. Everest then uses that code to return an answer to your employee.

This is a crucial innovation. Once you’ve verified that the code returns the proper numbers, you own that code. Each time you rerun the query, the AI simply uses the already proven code; it doesn’t reinvent things each time. For your employees, that means consistent, accurate, reliable answers. Plus, they aren’t charged AI usage credits every time they re-run that report.
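The pattern described here (the model writes the query once, and the database answers every time after that) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Everest's implementation: `translate_to_sql` is a hypothetical stand-in for a model call, `verified_queries` is a hypothetical store of approved queries, and the table and amounts are made up.

```python
import sqlite3

llm_calls = 0  # counts how often the (stubbed) model is invoked

def translate_to_sql(question: str) -> str:
    """Hypothetical stand-in for an LLM that turns a question into SQL."""
    global llm_calls
    llm_calls += 1
    return (
        "SELECT SUM(amount_cents) FROM revenue "
        "WHERE region = 'EMEA' AND quarter = 'Q3'"
    )

# Queries that have been reviewed and approved, keyed by normalized question.
verified_queries: dict[str, str] = {}

def answer(conn: sqlite3.Connection, question: str) -> int:
    key = question.strip().lower()
    sql = verified_queries.get(key)
    if sql is None:
        sql = translate_to_sql(question)
        # Assumption: a reviewer inspects and approves the SQL before it is stored.
        verified_queries[key] = sql
    # The database, not the model, does the math.
    (cents,) = conn.execute(sql).fetchone()
    return cents

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE revenue (region TEXT, quarter TEXT, amount_cents INTEGER)")
conn.executemany(
    "INSERT INTO revenue VALUES (?, ?, ?)",
    [("EMEA", "Q3", 210045010), ("EMEA", "Q3", 242344113), ("APAC", "Q3", 88000000)],
)

question = "What was revenue in Q3 for EMEA?"
answers = [answer(conn, question) for _ in range(3)]
print(answers, "model calls:", llm_calls)
```

In this sketch the stubbed model is called once across three runs, and each run returns the same integer number of cents. Storing amounts as integer cents is a design choice made here to keep the sums exact.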

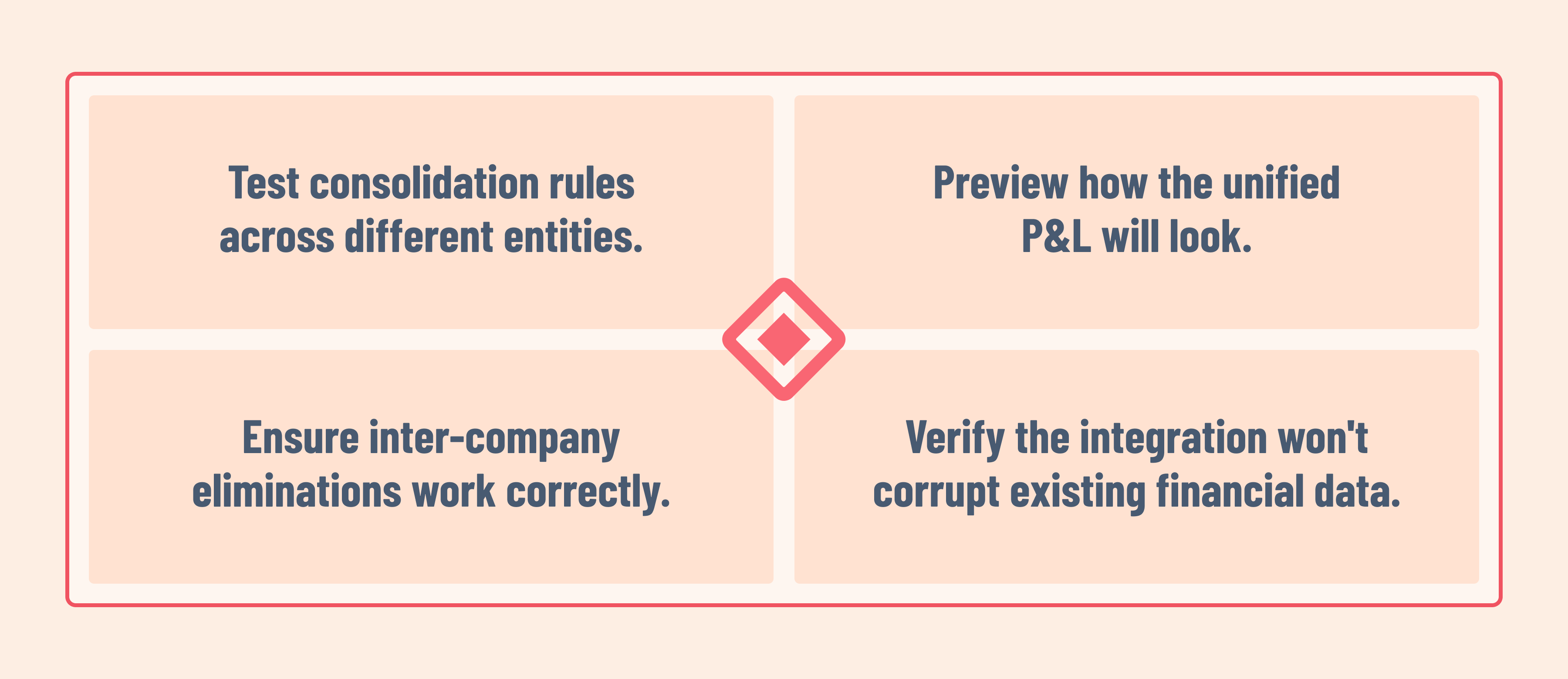

2. Sandbox environments

Sandboxing gives your finance team a safe, isolated test environment in which to try things. It allows them to de-risk system changes the way an engineer ships code updates. And with Everest, that sandbox uses real production data, so the results are accurate.

Imagine you’ve acquired a company and your team needs to integrate its books. Instead of waiting months for IT to test and implement the changes, sandboxing allows your team to:

Test consolidation rules across different entities.

Preview how the unified P&L will look.

Ensure inter-company eliminations work correctly.

Verify the integration won't corrupt existing financial data.

Instead of deferring decisions and fretting over potential errors, you can publish changes confidently.
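As a rough sketch of the sandbox idea, the Python below copies a small "production" database into an isolated in-memory copy, tries an inter-company elimination rule on the copy, and confirms the original is untouched. The table layout, entity names, and amounts are assumptions made for illustration; how Everest builds its sandboxes is not described in this article.

```python
import sqlite3

def make_sandbox(production: sqlite3.Connection) -> sqlite3.Connection:
    """Copy the production database into a separate in-memory database."""
    sandbox = sqlite3.connect(":memory:")
    production.backup(sandbox)  # standard-library online backup API
    return sandbox

production = sqlite3.connect(":memory:")
production.execute(
    "CREATE TABLE entries (entity TEXT, counterparty TEXT, amount_cents INTEGER)"
)
production.executemany(
    "INSERT INTO entries VALUES (?, ?, ?)",
    [
        ("Parent", None, 500000),       # external revenue
        ("Acquired", None, 200000),     # external revenue
        ("Parent", "Acquired", 75000),  # inter-company sale to be eliminated
    ],
)
production.commit()

sandbox = make_sandbox(production)

# Trial consolidation rule, applied to the sandbox only:
# drop entries whose counterparty is another entity inside the group.
sandbox.execute(
    "DELETE FROM entries WHERE counterparty IN ('Parent', 'Acquired')"
)

total_sql = "SELECT SUM(amount_cents) FROM entries"
consolidated_total = sandbox.execute(total_sql).fetchone()[0]
production_total = production.execute(total_sql).fetchone()[0]
print(consolidated_total, production_total)
```

The consolidated total in the sandbox excludes the inter-company sale, while the production total still includes it, which is the property the team needs before publishing a rule change.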

3. AI-generated applications

When an ERP is built with a unified data model and structured to allow AI to see everything, you can use AI to create “ephemeral apps.” These are bite-sized, low-risk, task-specific “apps” that run a report or conduct an analysis. Each one is confined to a single, well-defined process, like invoice matching. It deploys only where it is explicitly authorized to operate, and nothing writes directly to the ledger without passing through established rules and approvals.

Because each AI unit behaves like what developers call an isolated “microservice,” it can be monitored, versioned, or shut off without risk to the broader environment.
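One way to picture those guardrails is the Python sketch below. The `EphemeralApp` and `Ledger` classes, the scope names, and the approval flow are all hypothetical, invented here to illustrate scoped authorization, versioning, a shut-off switch, and an approval gate; they are not Everest's API.

```python
from dataclasses import dataclass, field

@dataclass
class EphemeralApp:
    name: str
    version: str
    allowed_scopes: frozenset  # processes the app is explicitly authorized for
    enabled: bool = True       # flip to False to shut the app off

@dataclass
class Ledger:
    posted: list = field(default_factory=list)   # approved entries only
    pending: list = field(default_factory=list)  # proposals awaiting approval

    def propose(self, app: EphemeralApp, scope: str, entry: dict) -> None:
        """Apps can only propose entries, and only within an authorized scope."""
        if not app.enabled:
            raise PermissionError(f"{app.name} is shut off")
        if scope not in app.allowed_scopes:
            raise PermissionError(f"{app.name} is not authorized for {scope}")
        self.pending.append((app.name, app.version, entry))

    def approve(self, index: int, approver: str) -> None:
        """Posting happens only through an explicit approval step."""
        app_name, version, entry = self.pending.pop(index)
        self.posted.append(
            {**entry, "source": f"{app_name}@{version}", "approved_by": approver}
        )

ledger = Ledger()
matcher = EphemeralApp("invoice-matcher", "1.0", frozenset({"invoice_matching"}))

ledger.propose(matcher, "invoice_matching", {"invoice": "INV-001", "amount_cents": 12500})
posted_before_approval = len(ledger.posted)  # nothing is posted yet
ledger.approve(0, approver="controller")

try:
    ledger.propose(matcher, "revenue_recognition", {"amount_cents": 1})
    out_of_scope_blocked = False
except PermissionError:
    out_of_scope_blocked = True

matcher.enabled = False  # shutting the app off leaves the ledger untouched
try:
    ledger.propose(matcher, "invoice_matching", {"invoice": "INV-002", "amount_cents": 900})
    disabled_blocked = False
except PermissionError:
    disabled_blocked = True
```

Each posted entry records which app version proposed it and who approved it, which is the kind of trail an auditor would expect to see.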

For example, Brady Nikolas, senior accountant at the software startup Spectora, uses Everest’s AI agents to run reports for him. He’s found that they can instantly generate pre-structured reports that used to take him hours. As a result, he’s a much better partner to functional leaders who want to know about their expenses and budgets.

It’s time for CFOs to move from caution to confidence

Understandably, CFOs have been wary of AI. A financial leader we spoke with captured the hesitation: “Native AI…I am not sure yet, just because, yeah.” But that’s yesterday’s wisdom. The question has shifted from ‘Should finance use AI?’ to ‘Which AI deserves to be my financial core?’

AI-native ERPs built with financial rigor in mind free CFOs to function as strategic partners rather than stewards of historical data. These systems deliver:

Speed without fragility. Support new models—subscription pricing, usage-based billing—without months of implementation work. Respond to regulatory changes without custom code. Integrate best-of-breed tools without middleware sprawl.

Insight without risk. Queries return facts, not approximations. Automation genuinely reduces work instead of just redistributing it to different team members. Reports run consistently and deliver numbers you can present in board meetings without hedging.

AI belongs in your ERP—but only if it's built to work the way financial systems must—with precision, consistency, and the ability to prove both.